(Is it time to learn gRPC?)

I love REST, I think in REST. Truly. It’s been the reliable workhorse of web development for two decades. Simple URLs, JSON payloads you can read in Notepad, and an army of Stack Overflow answers at your fingertips. REST won the web and for good reason.

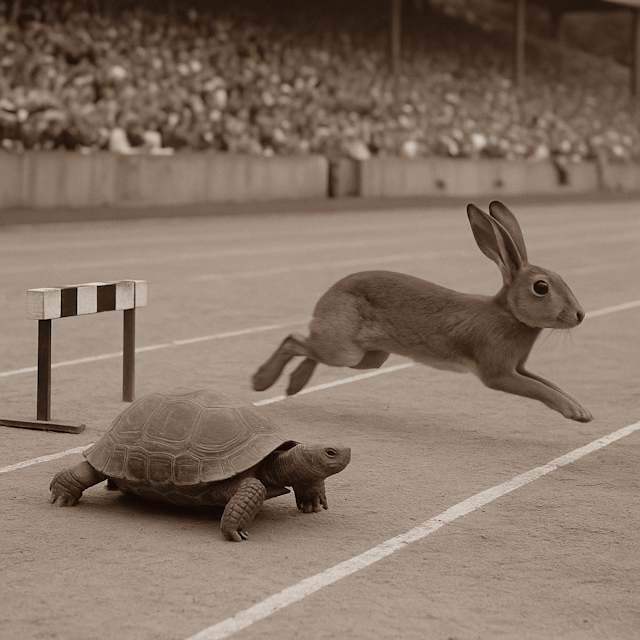

But inside a modern data centre? It’s starting to feel like we’re turning up to an F1 race in a Volvo estate, and no-one has ever got a win driving a Volvo.

REST Made Sense (Once Upon a Time)

REST was perfect for its original context: browsers talking to servers. Human-readable, cache-friendly, and built on top of HTTP, which was already everywhere. You could open Chrome dev tools, watch the network tab, and feel like you understood the world. All was well with the world.

But we’re not in Kansas anymore. Today most of our traffic isn’t browsers calling servers, it’s servers calling servers. Microservices, distributed systems, APIs talking to APIs. And in that world, REST’s strengths start looking like weaknesses:

• Verbose payloads: JSON is lovely to debug but bloated on the wire.
• Text everywhere: machines don’t need human-readable when they’re just passing messages at 100k/sec.
• No contracts: you get documentation (if you’re lucky), not guarantees.
• Latency: HTTP/1.1 with all its headers and handshakes wasn’t designed for millisecond-level chatter.
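To put a rough number on the “bloated on the wire” point, here is a minimal sketch that serialises the same message as compact JSON and as a fixed binary layout. The order message and its field names are made up for illustration, and Python’s `struct` module is only a stand-in for a binary format here; it is not how protobuf itself lays out bytes.

```python
import json
import struct

# Hypothetical order message, invented purely for illustration.
order = {"order_id": 123456789, "symbol": "ABCD", "quantity": 250, "price": 101.25}

# Compact JSON: no whitespace, but every field name still travels with every message.
json_bytes = json.dumps(order, separators=(",", ":")).encode("utf-8")

# Fixed binary layout (an assumption for this sketch):
# big-endian u64 id, 4-byte symbol, u32 quantity, f64 price.
LAYOUT = ">Q4sId"
binary_bytes = struct.pack(
    LAYOUT,
    order["order_id"],
    order["symbol"].encode("ascii"),
    order["quantity"],
    order["price"],
)

# Decode again to show nothing was lost; both sides just have to agree on the layout.
order_id, symbol, quantity, price = struct.unpack(LAYOUT, binary_bytes)

print(f"JSON:   {len(json_bytes)} bytes")
print(f"Binary: {len(binary_bytes)} bytes")
```

The binary form comes out at 24 bytes, and the JSON is well over twice that for identical data. Multiply the difference by 100k messages a second and it stops being a rounding error. The catch is that both sides now need to agree on the layout up front, which is exactly the contract problem in the third bullet.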

Enter gRPC

Google built gRPC to solve exactly this. Think of it as REST’s leaner, faster cousin who could teach Lando how to change gears.

• Protobuf, not JSON: compact, binary, and schema-driven.
• Contracts baked in: you define your service once and generate client/server stubs in every language under the sun.
• Streaming: not just request/response, but long-lived connections, bidirectional streaming, real-time feels.
• Performance: less fluff, more stuff ™.
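If “compact, binary, and schema-driven” sounds abstract, it helps to see what protobuf actually puts on the wire for a single integer field. The sketch below hand-rolls that encoding in plain Python: a tag byte built from the field number and wire type, followed by the value as a varint. The function names are my own, and in real life the generated code does this for you; this is only here to show why the payload is small.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a protobuf base-128 varint."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        low7 = value & 0x7F          # take the lowest 7 bits
        value >>= 7
        if value:
            out.append(low7 | 0x80)  # more bytes follow: set the continuation bit
        else:
            out.append(low7)         # last byte: continuation bit clear
            return bytes(out)


def encode_int_field(field_number: int, value: int) -> bytes:
    """Encode one varint field: tag (field number + wire type 0), then the value."""
    VARINT_WIRE_TYPE = 0
    tag = (field_number << 3) | VARINT_WIRE_TYPE
    return encode_varint(tag) + encode_varint(value)


# Field number 1 carrying the value 150, as in a schema like: int32 a = 1;
encoded = encode_int_field(1, 150)
as_json = json.dumps({"a": 150}, separators=(",", ":")).encode("utf-8")

print(encoded.hex())               # 089601
print(len(encoded), len(as_json))  # 3 9
```

The field name never goes over the wire, only its number. The schema in the .proto file is what lets both ends turn `08` back into “field `a`”, which is the “schema-driven” part doing real work.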

And yes, there’s a learning curve. You’ll need to write .proto files, get used to codegen, and stop thinking everything is a GET or a POST. But honestly? You can get through a “hello world” in an hour, and after that you’ll wonder why you stuck with REST for so long.

Let’s face it: your best buddy LLM is writing this anyway, so you might as well get the performance benefits.

Why Haven’t We All Switched?

Three reasons:

- Inertia. REST is everywhere, and “if it ain’t broke” is a hard mindset to shift.

- Tooling comfort. Curl, Postman, Fiddler (snigger) all make REST feel approachable. gRPC’s binary payloads aren’t as friendly at first glance.

- Learning tax. Engineers have to stop and learn something new, and that’s never free.

But if you’re building anything high-throughput inside the data centre – order management, trading platforms, ML pipelines – REST isn’t just dated, it’s wasteful.

So… Take the Hour

I’m not saying throw away REST everywhere. It’s still great for public APIs, human-facing debugging, and simple CRUD apps. But for internal service-to-service comms? It’s time to ask yourself the question: Why are we still doing this the old way?

Take the hour. Learn gRPC. You’ll save days (maybe months) down the line, and you’ll look like a budget-saving cape wearer. If nothing else, you’ll get to smugly drop “we’re running over HTTP/2 with protobuf contracts” into your next architecture review. That alone might be worth the price of admission.